How to Learn Python (Step-by-Step) in 2024

Learning Python was hard for me, but it didn't have to be.

A decade ago, I was a fresh college grad armed with a history degree and not much else. Fast forward to today, and I'm a successful machine learning engineer, data science and deep learning consultant, and founder of Dataquest.

Currently, I'm working on two deep learning projects, Marker and Surya. But let me be real with you: it wasn't all smooth sailing. My journey to learn Python was long, filled with setbacks, and often frustrating.

If I could turn back time, I'd do things much differently. This article will guide you through the steps to learning Python the right way. If I had this information when I started, it would have fast-tracked my career, saved thousands of hours of wasted time, and prevented much stress.

Read this article, bookmark it, and then read it again. It's the only Python learning guide you'll ever need.

Why Most New Learners Fail

Learning Python doesn't have to be difficult. If you’re using the right resources, it can be easy (and fun).

The Problem With Most Learning Resources

Many of the courses out there make learning Python more difficult than it has to be. Let me give you a personal example to illustrate my point.

When I first started learning Python, I wanted to do the things that excited me, like making websites or utilizing AI. Unfortunately, my course forced me to spend months on boring syntax. It was agony!

Throughout the course, Python code continued to look foreign and confusing. It was like an alien language. It’s no surprise I quickly lost interest.

Regrettably, most Python tutorials are very similar to this. They assume you need to learn all of Python syntax before you can start doing anything interesting. This is why most new learners give up.

An Easier Way

After many failed attempts, I found a process that worked better for me. I believe it's the best way to learn Python programming.

The process is simple. First, spend as little time as possible memorizing Python syntax. Then, take what you learn and dive headfirst into a project you actually find interesting.

This minimizes the time spent on mundane tasks and maximizes the fun parts of learning Python. Think analyzing data, building a website, or creating an autonomous drone with artificial intelligence!

This better way of learning is why I built Dataquest. Our courses will have you building projects immediately with minimal time spent doing the boring stuff.

But how do you execute this learning process? The following five steps will explain everything you need to know. Your journey to learn Python starts now.

Step 1: Identify What Motivates You

With the right motivation, anyone can become highly proficient in Python programming.

As a beginner, I struggled to keep myself awake when trying to memorize syntax. However, when I needed to apply Python fundamentals to build an interesting project, I happily stayed up all night to finish it.

What’s the lesson here? You need to find what motivates you and get excited about it! To get started, find one or two areas that interest you:

- Data Science / Machine Learning

- Mobile Apps

- Websites

- Computer Science

- Games

- Data Processing and Analysis

- Hardware / Sensors / Robots

- Automating Work Tasks

Step 2: Learn the Basic Syntax, Quickly

I know, I know. I said we’d spend as little time as possible on syntax. Unfortunately, we can't avoid it entirely.

Here are some good resources to help you learn the Python basics without killing your motivation:

- Introduction to Python Programming — A beginner-friendly course that gets you coding quickly and helps you practice as you learn.

- Learn Python the Hard Way — A book that teaches Python concepts from the basics to more in-depth programs.

- The Python Tutorial — The tutorial on the main Python site.

I can’t emphasize this enough: Learn what syntax you can and move on. Ideally, you will spend a couple of weeks on this phase but no more than a month.

The sooner you can start working on projects, the faster you will learn. You can always refer back to the syntax later if necessary.

Quick note: Learn Python 3, not Python 2. Unfortunately, many “learn Python” resources online still teach Python 2, but Python 2 reached its end of life in January 2020, so its bugs and security holes will not be fixed!
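Not sure which version you're running? You can ask Python itself. The sketch below is a minimal illustration (the function name `check_python_version` and its (3, 8) default are assumptions, not official requirements). It also shows two everyday Python 3 behaviors that differ from Python 2: `print` is a function, and `/` performs true division.

```python
import sys


def check_python_version(minimum=(3, 8)):
    """Return True if the running interpreter is at least `minimum`.

    The (3, 8) default is an illustrative assumption, not an official requirement.
    """
    # sys.version_info[:2] is a (major, minor) tuple, so tuples compare element by element.
    return sys.version_info[:2] >= minimum


if __name__ == "__main__":
    print("Running Python", sys.version.split()[0])
    print("Meets minimum version:", check_python_version())

    # In Python 3, print is a function and / always performs true division.
    print(7 / 2)   # 3.5 (Python 2 would print 3)
    print(7 // 2)  # 3, floor division in both versions
```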

Step 3: Make Structured Projects

Once you’ve learned the basic Python syntax, start doing projects. Applying your knowledge right away will help you remember everything you’ve learned.

It’s better to begin with structured projects until you feel comfortable enough to create your own.

Here are some examples of actual Dataquest projects. Which one ignites your curiosity?

- Building a Word-Guessing Game — Have some fun, and create a functional and interactive word-guessing game using Python.

- Building a Food Ordering App — Create a functional and interactive food ordering application using Python.

- Data Cleaning and Visualization Star Wars-Style: Fans of Star Wars will not want to miss this structured project using real data from the movie.

- Predicting Car Prices: Use the machine learning workflow to predict car prices.

- Predicting the Weather Using Machine Learning: Learn how to train a machine learning model for predicting the weather.

- Predicting Heart Disease: Build a k-nearest neighbors classifier to predict whether patients might be at risk of heart disease.
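To make the last project a little more concrete, here is a minimal k-nearest neighbors classifier in plain Python. It's a sketch, not the Dataquest project's code: the patient features (age, resting blood pressure) and labels are made-up illustrative values, not real medical data, and a real project would scale its features and likely use a library such as scikit-learn.

```python
import math
from collections import Counter


def euclidean_distance(a, b):
    """Straight-line distance between two equal-length feature vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def knn_predict(train_points, train_labels, query, k=3):
    """Predict the label of `query` by majority vote among its k nearest neighbors."""
    # Pair each training point's distance to the query with its label.
    distances = [
        (euclidean_distance(point, query), label)
        for point, label in zip(train_points, train_labels)
    ]
    # Keep the k closest points.
    nearest = sorted(distances, key=lambda pair: pair[0])[:k]
    # Majority vote among their labels.
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


# Hypothetical toy data: (age, resting blood pressure) -> 1 = at risk, 0 = not at risk.
patients = [(35, 118), (42, 125), (50, 130), (61, 150), (67, 160), (70, 155)]
labels = [0, 0, 0, 1, 1, 1]

print(knn_predict(patients, labels, (65, 152)))  # 1
```

The whole algorithm is "measure distances, take the closest k, vote," which is why k-nearest neighbors is a popular first machine learning project.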

Inspiration for Structured Projects

Remember, there is no right place to start when it comes to structured projects. Let your motivations and goals guide you.

Are you interested in general data science or machine learning? Do you want to build something specific, like an app or website? Here are some recommended resources for inspiration, organized by category:

Data Science / Machine Learning

- Dataquest — Teaches you Python and data science interactively. You analyze a series of interesting datasets, ranging from CIA documents to NBA player stats. You eventually build complex algorithms, including neural networks and decision trees.

- Scikit-learn Documentation — Scikit-learn is the main Python machine learning library. It has some great documentation and tutorials.

- CS109 — This is a Harvard class that teaches Python for data science. They have some of their projects and other materials online.

Mobile Apps

- Kivy Guide — Kivy is a tool that lets you make mobile apps with Python. They have a guide for getting started.

Websites

- Bottle Tutorial — Bottle is a lightweight web framework for Python. Here’s a guide for getting started with it.

- How To Tango With Django — A guide to using Django, a complex Python web framework.

Games

- Pygame Tutorials — Here’s a list of tutorials for Pygame, a popular Python library for making games.

- Making Games with Pygame — A book that teaches you how to make games using Python.

- Invent Your Own Computer Games with Python — A book that walks you through how to make several games using Python.
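If games are what motivate you, the core of a word-guessing game (like the Dataquest project mentioned in Step 3) fits in a few lines of Python. This is an illustrative sketch, not code from any of the resources above; the function names are hypothetical, and it reads guesses from a list so it runs without typing. In an interactive version, you would collect each guess with `input()` instead.

```python
def mask_word(secret, guessed):
    """Show guessed letters and hide the rest with underscores."""
    return " ".join(letter if letter in guessed else "_" for letter in secret)


def play(secret, guesses, max_misses=6):
    """Run one game from a sequence of guesses; return True if the word is found."""
    guessed = set()
    misses = 0
    for guess in guesses:
        guess = guess.lower()
        if guess in guessed:
            continue  # Ignore repeated guesses.
        guessed.add(guess)
        if guess not in secret:
            misses += 1
            print(f"No '{guess}'. Misses left: {max_misses - misses}")
            if misses >= max_misses:
                print(f"Out of guesses! The word was '{secret}'.")
                return False
        else:
            print(mask_word(secret, guessed))
        if all(letter in guessed for letter in secret):
            print("You got it!")
            return True
    return False  # Ran out of guesses before solving the word.


play("python", ["e", "p", "y", "t", "h", "o", "n"])
```

Keeping the game logic in functions that take their input as arguments makes it easy to test now and to wire up to `input()` or a graphical library like Pygame later.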

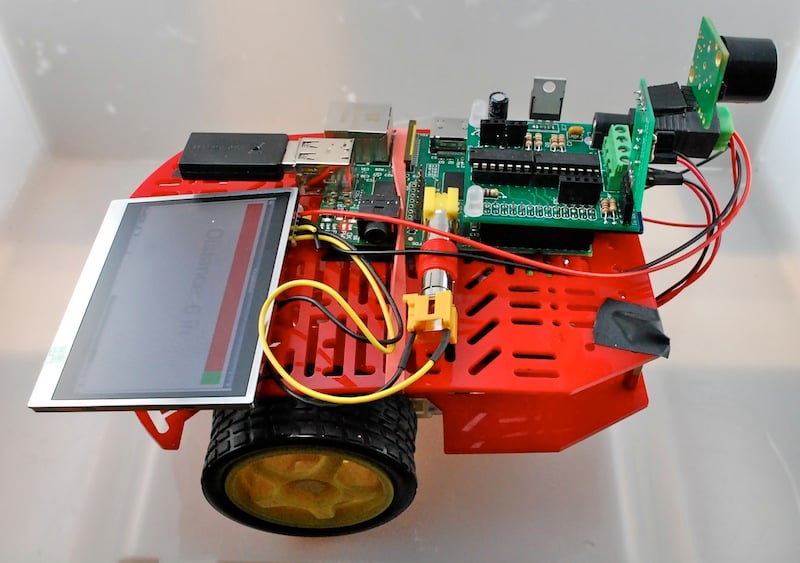

Hardware/Sensors/Robots

- Using Python with Arduino — Learn how to use Python to control sensors connected to an Arduino.

- Learning Python with Raspberry Pi — Build hardware projects using Python and a Raspberry Pi.

- Learning Robotics using Python — Learn how to build robots using Python.

- Raspberry Pi Cookbook — Learn how to build robots using the Raspberry Pi and Python.

Scripts to Automate Your Work

- Automate the Boring Stuff with Python — Learn how to automate day-to-day tasks using Python.
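For a taste of what work automation looks like, here is a minimal sketch that tidies a folder by moving each file into a subfolder named after its extension. The function name and folder layout are illustrative assumptions; try it on a copy of a folder before pointing it at anything important.

```python
import shutil
from pathlib import Path


def organize_by_extension(folder):
    """Move each file in `folder` into a subfolder named after its extension.

    Files without an extension go into a subfolder called "no_extension".
    Returns a dict mapping subfolder names to the number of files moved.
    """
    folder = Path(folder)
    moved = {}
    # Materialize the listing first, since we create subfolders while looping.
    for path in list(folder.iterdir()):
        if not path.is_file():
            continue  # Skip existing subfolders.
        name = path.suffix.lstrip(".").lower() or "no_extension"
        target_dir = folder / name
        target_dir.mkdir(exist_ok=True)
        shutil.move(str(path), str(target_dir / path.name))
        moved[name] = moved.get(name, 0) + 1
    return moved


if __name__ == "__main__":
    import tempfile

    # Demo on a throwaway folder so no real files are touched.
    with tempfile.TemporaryDirectory() as tmp:
        for filename in ["report.pdf", "notes.txt", "data.csv", "todo.txt", "README"]:
            (Path(tmp) / filename).write_text("example")
        print(organize_by_extension(tmp))
```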

Projects are crucial. They stretch your capabilities, help you learn new Python concepts, and allow you to showcase your abilities to potential employers. Once you’ve done a few structured projects, you can move on to working on your own projects.

Step 4: Work on Python Projects on Your Own

After you’ve worked through a few structured projects, it’s time to kick things up a notch and speed up your learning by working on independent Python projects.

Here’s the key: Start with a small project. It's better to finish a small project than embark on a huge one that never gets completed.

8 Tips for Discovering Captivating Python Projects

I know it can feel daunting to find a good Python project to work on. Here are some tips for finding interesting projects:

- Extend the projects you were working on before and add more functionality.

- Check out our list of Python projects for beginners.

- Go to Python meetups in your area and find people working on interesting projects.

- Find open-source packages to contribute to.

- See if any local nonprofits are looking for volunteer developers.

- Find projects other people have made and see if you can extend or adapt them. GitHub is a good place to start.

- Browse through other people’s blog posts to find interesting project ideas.

- Think of tools that would make your everyday life easier. Then, build them.

17 Python Project Ideas

Need more inspiration? Here are some extra ideas to jumpstart your creativity:

Data Science/Machine Learning Project Ideas

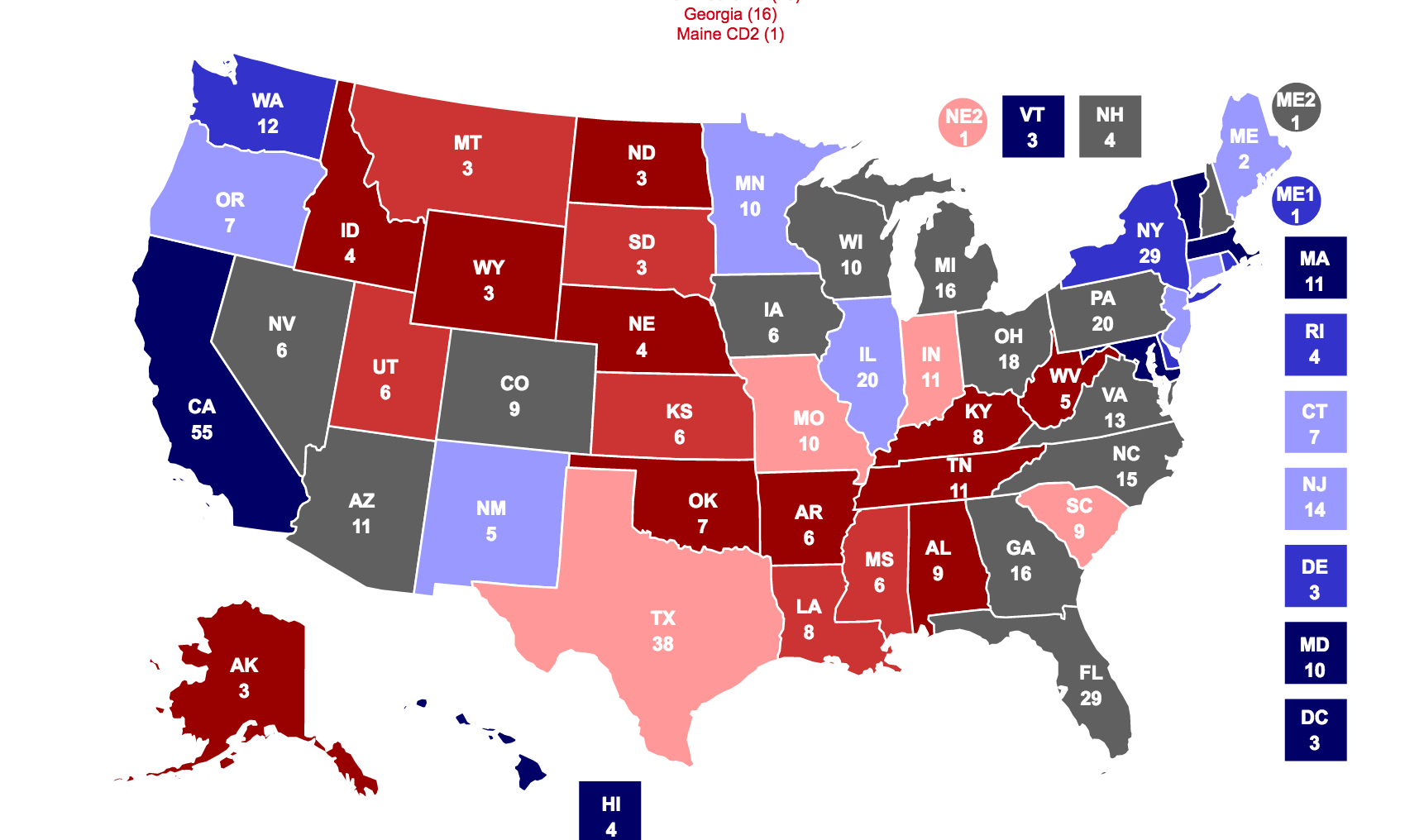

- A map that visualizes election polling by state

- An algorithm that predicts the local weather

- A tool that predicts the stock market

- An algorithm that automatically summarizes news articles
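The summarization idea can start much simpler than it sounds. One classic baseline (an assumption here, not a method from this article) is frequency-based extractive summarization: score each sentence by how often its words appear in the whole article, then keep the top-scoring sentences in their original order. A minimal sketch, with a deliberately tiny stop-word list:

```python
import re
from collections import Counter

# A tiny, illustrative stop-word list; real projects use a fuller one.
STOP_WORDS = {
    "the", "a", "an", "and", "or", "of", "to", "in", "is", "it",
    "on", "for", "that", "with", "as", "was", "are",
}


def summarize(text, num_sentences=2):
    """Return the `num_sentences` highest-scoring sentences, in original order."""
    # Naive sentence split on ., ! or ? followed by whitespace.
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    words = re.findall(r"[a-z']+", text.lower())
    freq = Counter(w for w in words if w not in STOP_WORDS)

    def score(sentence):
        tokens = [w for w in re.findall(r"[a-z']+", sentence.lower()) if w not in STOP_WORDS]
        # Average word frequency, so long sentences aren't automatically favored.
        return sum(freq[w] for w in tokens) / (len(tokens) or 1)

    ranked = sorted(range(len(sentences)), key=lambda i: score(sentences[i]), reverse=True)
    keep = sorted(ranked[:num_sentences])  # Restore original sentence order.
    return " ".join(sentences[i] for i in keep)


article = "Python is popular. Python is used for data science. Cats sleep a lot."
print(summarize(article, num_sentences=1))  # Python is popular.
```

From here, a natural next step is swapping the word counts for a machine learning model, which is exactly the kind of incremental growth Step 5 describes.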

Mobile App Project Ideas

- An app to track how far you walk every day

- An app that sends you weather notifications

- A real-time, location-based chat
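For the walking tracker, the core calculation is turning a series of GPS readings into a distance. A common approach is the haversine formula, which gives the great-circle distance between two latitude/longitude points. This sketch assumes you already have the coordinates; a real app would get them from the phone's location services, and the readings below are hypothetical.

```python
import math

EARTH_RADIUS_KM = 6371.0  # Mean Earth radius; the Earth isn't a perfect sphere.


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in kilometers between two (lat, lon) points in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlambda = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlambda / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def total_distance_km(points):
    """Sum the distances between consecutive (lat, lon) readings."""
    return sum(haversine_km(*start, *end) for start, end in zip(points, points[1:]))


# Hypothetical GPS readings from a short walk.
walk = [(40.7580, -73.9855), (40.7614, -73.9776), (40.7648, -73.9730)]
print(f"{total_distance_km(walk):.2f} km")
```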

Website Project Ideas

- A site that helps you plan your weekly meals

- A site that allows users to review video games

- A note-taking platform

Python Game Project Ideas

- A location-based mobile game, in which you capture territory

- A game in which you solve puzzles through programming

Hardware/Sensors/Robots Project Ideas

- Sensors that monitor your house remotely

- A smarter alarm clock

- A self-driving robot that detects obstacles

Work Automation Project Ideas

- A script to automate data entry

- A tool to scrape data from the web
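For the web-scraping idea, Python's standard library can already pull links out of a page. The sketch below uses `html.parser.HTMLParser` on an inline HTML string so it runs offline; fetching a real page (for example, with `urllib.request.urlopen`) is left out, and you should check a site's terms of use and robots.txt before scraping it. The class name `LinkCollector` is hypothetical.

```python
from html.parser import HTMLParser


class LinkCollector(HTMLParser):
    """Collect (link text, href) pairs from every <a> tag in an HTML document."""

    def __init__(self):
        super().__init__()
        self.links = []
        self._current_href = None
        self._current_text = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            # attrs is a list of (name, value) pairs.
            self._current_href = dict(attrs).get("href")
            self._current_text = []

    def handle_data(self, data):
        if self._current_href is not None:
            self._current_text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._current_href is not None:
            text = "".join(self._current_text).strip()
            self.links.append((text, self._current_href))
            self._current_href = None


html = """
<ul>
  <li><a href="/python">Python tutorials</a></li>
  <li><a href="/projects">Project ideas</a></li>
</ul>
"""

parser = LinkCollector()
parser.feed(html)
print(parser.links)  # [('Python tutorials', '/python'), ('Project ideas', '/projects')]
```

Once this works, third-party libraries such as Requests and Beautiful Soup make the same job shorter, but the standard-library version shows what's happening underneath.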

The key is to pick something and do it. If you get too hung up on finding the perfect project, you risk never starting one.

My first independent project consisted of adapting my automated essay-scoring algorithm from R to Python. It didn't look pretty, but it gave me a sense of accomplishment and started me on the road to building my skills.

Obstacles are inevitable. As you build your project, you will encounter problems and errors with your code. Here are some resources to help you.

Resources If You Get Stuck

Don’t let setbacks discourage you. Instead, check out these resources that can help:

- Stack Overflow — A community question and answer site where people discuss programming issues. You can find Python-specific questions here.

- Google — The most commonly used tool of any experienced programmer. It’s very useful when trying to resolve errors.

- Python Documentation — A good place to find reference material on Python.
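When you search Google or Stack Overflow for help, the most useful thing to paste is usually the last line of the traceback: the exception type and its message. This small sketch (the helper name `describe_error` is hypothetical) catches an error and returns exactly that "Type: message" text.

```python
def describe_error(func, *args):
    """Call func(*args); if it raises, return a searchable 'ExceptionType: message' string."""
    try:
        func(*args)
    except Exception as error:
        # The same "Type: message" text appears on the last line of a traceback.
        return f"{type(error).__name__}: {error}"
    return "No error"


print(describe_error(int, "forty-two"))
# ValueError: invalid literal for int() with base 10: 'forty-two'
print(describe_error(lambda items: items[5], [1, 2, 3]))
# IndexError: list index out of range
```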

Step 5: Keep Working on Harder Projects

As you find success with independent projects, keep increasing the difficulty and scope of your projects. Learning Python is a process, and you’ll need momentum to get through it.

Once you’re completely comfortable with what you’re building, it’s time to try something harder. Continue to find new projects that challenge your skills and push you to grow.

5 Prompts for Mastering Python

Here are some ideas for when that time comes:

- Try teaching a novice how to build one of your projects.

- Ask yourself: Can you scale your tool? Can it work with more data, or can it handle more traffic?

- Try making your program run faster.

- Imagine how you might make your tool useful for more people.

- Imagine how to commercialize what you’ve made.
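For the "make your program run faster" prompt, the usual first step is to measure before changing anything. Here is a small sketch using the standard library's `timeit` module to compare two ways of checking membership. The data sizes are arbitrary, and timings vary by machine, so no numbers are shown.

```python
import timeit

# Arbitrary illustrative sizes.
items_list = list(range(10_000))
items_set = set(items_list)
lookups = range(0, 10_000, 100)


def count_hits_list():
    """Membership tests against a list scan elements one by one (O(n) each)."""
    return sum(1 for x in lookups if x in items_list)


def count_hits_set():
    """Membership tests against a set use hashing (O(1) on average)."""
    return sum(1 for x in lookups if x in items_set)


# Both versions must give the same answer before speed matters.
assert count_hits_list() == count_hits_set()

list_time = timeit.timeit(count_hits_list, number=20)
set_time = timeit.timeit(count_hits_set, number=20)
print(f"list: {list_time:.4f}s  set: {set_time:.4f}s")
```

Choosing a better data structure is often a bigger win than micro-tweaking code, and checking that both versions agree keeps "faster" from quietly becoming "wrong."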

Final Words

Remember, Python is continually evolving. There are only a few people in the world who can claim to understand Python completely. And these are the people who created it!

Where does that leave you? In a constant state of learning and working on new projects to hone your skills.

Six months from now, you’ll look back on your code and realize how terrible it is. At this point, you’ll know you’re on the right track.

If you thrive with minimal structure, then you have all you need to start your journey. However, if you need a little more guidance, our courses may help.

I founded Dataquest to help people learn quickly and avoid the things that make people quit. Our courses will have you writing actual code within minutes and completing real projects within hours.

If you want to learn Python to become a business analyst, data analyst, data engineer, or data scientist, we have career paths designed to take you from complete beginner to job-ready in months. Or, you can dip your toe in the water first and test-drive our introductory Python course here.

Common Python Questions (FAQs)

Is it hard to learn Python?

Learning Python can certainly be challenging. However, if you take the step-by-step approach I’ve outlined here, you’ll find that it’s much easier than you think.

Can you learn Python for free?

There are many free Python learning resources out there. At Dataquest, for example, we have dozens of free Python tutorials. You can also sign up for our interactive data science learning platform at no charge.

The downside to free resources is the lack of structure; you’ll need to patch together several free resources. This means you’ll spend extra time researching what you need to learn next and how to learn it. You may find that you've wasted time learning the wrong things, or that you often get stuck because you lack the prerequisite knowledge to complete a project or tutorial.

Premium platforms may offer better teaching methods (like the interactive, in-browser coding Dataquest offers). They also save you time finding and building your own curriculum.

Can you learn Python from scratch (with no coding experience)?

Yes. Python is a great language for programming beginners because you don’t need prior experience with code to pick it up. Dataquest helps students with no coding experience go on to get jobs as data analysts, data scientists, and data engineers.

How long does it take to learn Python?

Learning a programming language is a bit like learning a spoken language — you’re never really done! That’s because languages evolve, so there’s always more to learn! Still, you can master writing simple but functional Python code pretty quickly.

How long will it take to get job-ready? That depends on your goals, the specific job you’re looking for, and how much time you can dedicate to study.

The Dataquest learners we surveyed reported reaching their learning goals in less than a year. Many did it in under six months, with no more than ten hours of weekly study.

How can I learn Python faster?

Find a platform that teaches Python (or build a curriculum for yourself) specifically for the skill you want to learn (e.g., Python for game dev or Python for data science).

That way, you’re not wasting time learning things extraneous to your day-to-day Python work.

Do you need a Python certification to find work?

Probably not. In data science, certificates don’t carry much weight. Employers care about skills, not paper credentials.

Translation? A GitHub profile full of great Python code is much more important than a certificate.

Should you learn Python 2 or 3?

Python 3, hands-down. A few years ago, this was still a topic of debate. Some critics even claimed that Python 3 would “kill Python.” That hasn’t happened. Today, Python 3 is everywhere.

Is Python relevant outside of data science/machine learning?

Yes. Python is a popular and flexible language that’s used professionally in a wide variety of contexts.

We teach Python for data science and machine learning, but you can also apply your skills in other areas. Python is used in finance, web development, software engineering, game development, and more.

Having some data analysis skills with Python can also be useful for various other jobs. If you work with spreadsheets, for instance, there are likely things you could be doing faster and better with Python.
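As a small example of the spreadsheet point, here is a sketch that totals a column by category from a CSV export, using only the standard library's `csv` module. The column names (`region`, `sales`) and the inline data are made up for illustration; with a real file, you would pass `open("your_file.csv", newline="")` instead of the `StringIO` object.

```python
import csv
import io
from collections import defaultdict


def total_by_category(csv_file, category_column, value_column):
    """Sum `value_column` for each distinct value in `category_column`."""
    totals = defaultdict(float)
    for row in csv.DictReader(csv_file):
        totals[row[category_column]] += float(row[value_column])
    return dict(totals)


# Hypothetical spreadsheet export, inlined so the example runs as-is.
sample = io.StringIO(
    "region,sales\n"
    "North,120.50\n"
    "South,80\n"
    "North,99.50\n"
)

print(total_by_category(sample, "region", "sales"))  # {'North': 220.0, 'South': 80.0}
```

Once a task like this lives in a script, rerunning it on next month's export takes seconds instead of another round of manual spreadsheet work.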

Where can you learn basic Python programming?

There are plenty of Python courses, books, boot camps, and free guides online. We always recommend Dataquest, but we're a bit biased.

The Python basics include:

- Python lists

- Python loops

- Python strings

- Python functions

- Python arrays

- Python operators

- Python syntax

- And more
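Here is a short, illustrative snippet that touches several of these basics at once: a list, a loop, string methods, a function, and a few operators. The names and numbers are made up; think of it as a quick self-check that the core syntax feels familiar.

```python
def describe_scores(name, scores):
    """Build a one-line summary of a list of scores."""
    if not scores:
        return f"{name.title()}: no scores"
    total = 0
    for score in scores:           # loop over a list
        total += score             # augmented assignment operator
    average = total / len(scores)  # true division returns a float
    passed = [s for s in scores if s >= 60]  # list comprehension with a comparison
    return f"{name.title()}: average {average:.1f}, passed {len(passed)} of {len(scores)}"


print(describe_scores("ada lovelace", [88, 54, 71, 95]))
# Ada Lovelace: average 77.0, passed 3 of 4
```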

Here at Dataquest, we teach the basics while expediting the time required to apply what you learned with a fun project. Try our introductory course here.

What types of jobs can you get knowing Python?

Many jobs can benefit from Python skills. Here are a few careers that you can break into if you know Python:

- Python Developer

- Data Analyst

- Data Scientist

- Data Engineer

- Business Analyst

- Machine Learning Engineer

- Software Engineer