The Power of Project-First Learning: A Deep Dive into Dataquest Projects

Unlock the potential of hands-on learning with ‘The Power of Project-First Learning: A Deep Dive into Dataquest Projects.’ Discover how this innovative approach not only enhances your understanding of data science but also equips you with the real-world skills needed to thrive in the industry. From choosing your path to impressing future employers, this blog guides you through a transformative journey that turns learning into tangible success. Start building your future today!

Why Are Projects Important?

Project-first learning isn’t a buzzword. It is an essential cog in the wheel of effective learning, specifically in the rapidly evolving field of data science. But why is learning through projects so crucial?

Project-first learning is an invaluable approach for mastering data science and AI skills. It creates an environment that closely mirrors real-world conditions and problem-solving scenarios. As data science and AI continue to revolutionize industries with an expected annual growth rate of 37.3% between 2023 and 2030[1], project-first learning will increasingly become a pivotal component of effective learning methodologies.

Practical Experience

In the real world, data doesn’t come in neat, clean packages. The critical skill of sifting through the chaotic mess of real-world data is rarely developed through traditional methods. Projects provide the chance to tackle raw, messy data, applying theoretical knowledge to real-life scenarios, a critical capability in data science and AI roles.

Staying Motivated

Projects can also be an excellent tool for maintaining motivation. Working towards a tangible end product creates a sense of accomplishment, which in turn increases your engagement and enthusiasm for learning. Furthermore, completed projects can demonstrate your abilities to potential employers in a vibrant job market projected to reach a staggering $407 billion by 2027[2].

Confidence Building

Lastly, the completion of each project aids in building your confidence. Confidence is not inherent; it can be learned and practiced. With each project, learners build confidence, fostering the courage to tackle more complex tasks.

Building a Data Project Portfolio

In the data-related job market, your resume or CV is just a snapshot of your skills and qualifications, not the whole picture. Stating that you have excellent communication abilities, can use various coding languages, and possess a deep understanding of machine learning procedures is one thing. Offering tangible proof of these skills through your work is another matter altogether. A portfolio of your data projects is an effective way to validate your skills and display your accomplishments.

Creating a project portfolio is therefore an essential part of the job application process in data science. But what is a project portfolio? In the world of data science, a project portfolio is defined as a collection of projects that you present as evidence of your abilities during your job application. It is designed to provide tangible proof of your skills, showcasing your capacity for applying theoretical knowledge to practical situations. As such, a well-curated portfolio can offer a comprehensive picture of your problem-solving capabilities, data interpretation skills, and effectiveness in communication.

What is a Data Project Portfolio?

Your portfolio should consist of several projects that highlight your best work. For example, you might include a project where you used machine learning algorithms to predict customer behavior based on a dataset, or a project where you created an impressive data visualization to help a company understand its sales data. Another example could be a project that demonstrates your ability to clean and analyze a complex dataset, showcasing your data wrangling and statistical skills. These projects serve as a testament to your capacity to handle real-world problems and deliver valuable insights.

What Are Employers Looking For?

Employers value project portfolios highly. As an emerging field, data science focuses more on the work you have done than on the number of years you have spent in the industry. In fact, if you have no prior experience in the data science field, your project portfolio may determine whether you receive that crucial callback for an interview. This is because your projects substitute for work experience in your applications and prove to potential employers that you’re capable of performing the kind of data science work you’re applying for.

Creating an engaging narrative through your portfolio is essential in the field of data analytics, where facts and figures are utilized to weave a story. Hence, presenting your work creatively and effectively is just as crucial as the content you’re sharing. A solid portfolio not only increases your chances of landing a job but also places you at an advantage in a competitive job market.

The first step in making a high-quality portfolio is to know what skills to demonstrate. The primary skills that companies want in data scientists, and thus the primary skills they want a portfolio to demonstrate, are:

- Ability to communicate

- Ability to collaborate with others

- Technical competence

- Ability to reason about data

- Motivation and ability to take initiative

Any good portfolio will be composed of multiple projects, each of which may demonstrate 1-2 of the above points. If you want to learn more about what skills employers want to see a candidate demonstrate, here’s a good blog on what those skills look like and how to build a portfolio that demonstrates all of those skills effectively.

Start Your First Project

Time to start your project! Make sure your project meets the criteria in the section above and reflects your passions, expertise, and capabilities.

Understanding the Data Science Workflow

Before we start, it’s essential to understand the data science workflow. It’s like a roadmap guiding us through the project. The workflow includes several stages:

- Data Collection: This is where we gather our raw materials, the data. It could be from various sources like databases, APIs, web scraping, or online repositories. For example, if you’re interested in social media analysis, you might collect data from Twitter using its API.

- Feature Extraction and Exploratory Data Analysis (EDA): Here, we transform raw data into a format that can be analyzed (feature extraction) and then explore it to understand its characteristics (EDA). For instance, if you’re working with text data, feature extraction might involve converting the text into numerical vectors. EDA could involve finding the most common words or the sentiment of the text.

- Model Selection and Validation: In this stage, we choose a suitable machine learning model and validate its performance using techniques like cross-validation. For example, if you’re working on a classification problem, you might choose a logistic regression model and validate it using k-fold cross-validation.

- Model Deployment, Continuous Monitoring, and Improvement: Once we’re satisfied with our model, we deploy it. But our job doesn’t end there. We continuously monitor the model’s performance and make improvements as needed. For instance, if your model is a recommendation system for a website, you would monitor how users interact with the recommendations and update the model based on this feedback.
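To make the middle stages of the workflow more concrete, here is a minimal sketch that uses only Python’s standard library. The sample sentences and the helper names `bag_of_words` and `k_fold_indices` are invented for illustration; in a real project you would more likely rely on an established library for vectorizing text and splitting folds. The sketch turns text into count vectors (feature extraction), counts the most frequent words (EDA), and produces train/test index splits for k-fold cross-validation.

```python
from collections import Counter

# Toy text data, invented purely for illustration.
texts = [
    "great food and fast delivery",
    "cold food and slow delivery",
    "great service",
]


def bag_of_words(docs):
    """Feature extraction: turn each document into a vector of word counts."""
    vocab = sorted({word for doc in docs for word in doc.split()})
    vectors = []
    for doc in docs:
        counts = Counter(doc.split())
        vectors.append([counts[word] for word in vocab])
    return vocab, vectors


def k_fold_indices(n_samples, k):
    """Validation: yield (train, test) index lists for k-fold cross-validation."""
    indices = list(range(n_samples))
    # Spread any remainder over the first folds so sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test = indices[start:start + size]
        train = indices[:start] + indices[start + size:]
        yield train, test
        start += size


vocab, vectors = bag_of_words(texts)

# EDA: how often does each word appear across all documents?
word_totals = Counter(word for doc in texts for word in doc.split())

# Each sample is used for testing exactly once across the folds.
folds = list(k_fold_indices(len(texts), k=3))
```

Each fold’s `train` indices would be used to fit a model (for example, a logistic regression) and its `test` indices to score it; averaging the scores across folds gives the cross-validated estimate.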

Dataquest simplifies this learning process with its unique teaching approach, making it easy for beginners to understand and follow the data science workflow. Its outcome-based learning platform simulates the real-world challenges that data professionals face every day, thereby providing contextual learning. The platform’s ‘Backwards Curriculum Design’ ensures that each course is created with the end in mind. By working with industry professionals, Dataquest determines real-world scenarios and builds learning objectives based on these scenarios. This way, learners are equipped with the skills required to complete projects successfully, proving their ability to apply these skills in a job setting.

Choose a Project or Pick a Topic

Pick projects that resonate with you. Remember, data science is broad and it’s impossible to be an expert in everything. The projects you select should allow you to delve into different facets of data science and progressively grow your skillset. Beginners can start with projects such as House Prices Regression or Titanic Classification. It’s advisable to complete multiple projects, each focusing on different aspects of data science like data collection, data cleaning, data exploration, data visualization, regression, statistics, and machine learning. Opt for projects that mimic real-world situations. For example, web scraping reviews from a food delivery website or scraping an online course website can provide invaluable hands-on experience. These kinds of projects not only help you understand how to collect and clean data but also expose you to the practical applications of data science.

Here are our top four beginner-friendly projects you can start with:

- Build a Word Guessing Game

- Build A Food Ordering App

- Time-Series Forecasting on the S&P 500

- Predicting The Weather Using Machine Learning

Or you can choose from hundreds of guided projects on the Dataquest learning platform.

Or pick a topic that you’re interested in and motivated to explore. It’s very obvious when people are making projects just to make them, and when people are making projects because they’re genuinely interested in exploring the data. It’s worth spending extra time on this step to make sure you find something you’re actually interested in. A good way to find a topic is to browse different datasets and see what looks interesting. Here are some good sites to start with:

• Data.gov — contains government data.

• /r/datasets — a subreddit that has hundreds of interesting datasets.

• Awesome datasets — a list of datasets, hosted on Github.

• 17 places to find datasets — a blog post with 17 data sources, and example datasets from each.

In real-world data science, you often won’t find a nice single dataset that you can browse. You might have to aggregate disparate data sources, or do a good amount of data cleaning. If a topic is very interesting to you, it’s worth doing the same here, so you can show off your skills better.

Find a Dataset

Now that you’ve chosen a project, you need a dataset. There are many resources available online where you can find datasets. Some of our favorites are Kaggle, UCI Machine Learning Repository, and Google Dataset Search. Choose a dataset that aligns with your project goals. Ensure the data comes from reliable sources to maintain the credibility of your project. If you need a little help finding free datasets, here’s a good place to start.

Clean the Data

Once you’ve found your dataset, it’s time to roll up your sleeves and clean it. This step involves removing any errors or inconsistencies in the data. It might seem tedious, but remember, a clean dataset is crucial to the success of your project. For instance, you might need to handle missing values, remove duplicates, or correct inconsistent entries.
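The three cleaning tasks mentioned above can be sketched in a few lines. This is a minimal example using only Python’s standard library; the records, the column names, and the `clean` helper are all invented for illustration, and filling gaps with the (low) median is just one of several reasonable choices. In practice a library such as pandas is the more common tool for this step.

```python
from statistics import median_low

# Toy records, invented for illustration; None marks a missing value.
raw_rows = [
    {"city": "Boston", "rooms": 3, "price": 450000},
    {"city": "boston ", "rooms": 3, "price": 450000},    # same listing, messy spelling
    {"city": "Denver", "rooms": None, "price": 380000},  # missing room count
    {"city": "Denver", "rooms": 4, "price": 410000},
]


def clean(rows):
    """Normalize city names, drop duplicates, then fill missing room counts."""
    # 1. Correct inconsistent entries: trim whitespace and standardize case.
    normalized = [{**row, "city": row["city"].strip().title()} for row in rows]

    # 2. Remove duplicates while keeping the original order.
    seen = set()
    unique = []
    for row in normalized:
        key = (row["city"], row["rooms"], row["price"])
        if key not in seen:
            seen.add(key)
            unique.append(row)

    # 3. Handle missing values: fill gaps with the median of the known values.
    known_rooms = [row["rooms"] for row in unique if row["rooms"] is not None]
    fill_value = median_low(known_rooms)
    return [
        {**row, "rooms": fill_value if row["rooms"] is None else row["rooms"]}
        for row in unique
    ]


cleaned = clean(raw_rows)
```

Duplicates are removed before the median is computed so that a repeated row does not skew the fill value.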

Explore the Data

Now comes the fun part – exploring the data! This involves looking at the data from different angles, visualizing it, and trying to find patterns or insights. It’s like being a detective, where your clues are hidden in the data. For example, for a house price prediction project, you might plot the distribution of prices, investigate the relationship between the number of rooms and price, or identify neighborhoods with the highest average prices.
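As a rough illustration of the house price questions above, the sketch below uses only Python’s standard library. The listings, the column names, and the helpers `average_price_by` and `pearson` are invented for this example; a real exploration would typically use pandas and a plotting library instead.

```python
from collections import Counter, defaultdict
from statistics import mean

# Toy listings, invented for illustration.
listings = [
    {"neighborhood": "Riverside", "rooms": 2, "price": 200_000},
    {"neighborhood": "Riverside", "rooms": 3, "price": 260_000},
    {"neighborhood": "Hilltop", "rooms": 4, "price": 400_000},
    {"neighborhood": "Hilltop", "rooms": 5, "price": 480_000},
]


def average_price_by(rows, key):
    """Group rows by `key` and return the mean price of each group."""
    groups = defaultdict(list)
    for row in rows:
        groups[row[key]].append(row["price"])
    return {name: mean(prices) for name, prices in groups.items()}


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sum((x - mx) ** 2 for x in xs) ** 0.5
    sy = sum((y - my) ** 2 for y in ys) ** 0.5
    return cov / (sx * sy)


# Distribution of prices, bucketed into 100,000-wide bins.
price_buckets = Counter(row["price"] // 100_000 * 100_000 for row in listings)

# Relationship between the number of rooms and price.
rooms_price_corr = pearson(
    [row["rooms"] for row in listings],
    [row["price"] for row in listings],
)

# Neighborhood with the highest average price.
avg_by_neighborhood = average_price_by(listings, "neighborhood")
top_neighborhood = max(avg_by_neighborhood, key=avg_by_neighborhood.get)
```

A correlation close to 1 here only reflects how the toy data was constructed; with real listings you would plot the relationship before drawing conclusions.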

The important thing is to stick to a single angle. Trying to focus on too many things at once will make it hard to make an effective project. It’s also important to pick an angle that has sufficient nuance. Here are examples of angles without much nuance:

- Figuring out which banks sold loans to Fannie Mae that were foreclosed on the most.

- Figuring out trends in borrower credit scores.

- Exploring which types of homes are foreclosed on most often.

- Exploring the relationship between loan amounts and foreclosure sale prices.

All of the above angles are interesting and would be great if we were focused on storytelling, but aren’t great fits for an operational project.

Communicate Your Results

Once you’ve built your model and made some predictions, it’s time to share your findings. This could involve creating a report or presentation, or even writing a blog post about your project. Remember, communication is a key skill in data science. It’s not enough to build a great model; you also need to explain it to others.

Let’s consider a beginner data science project, such as analyzing a dataset of Titanic passengers to predict survival. After you’ve built your model and made some predictions, you might create a presentation to share your findings.

In your presentation, you could start by explaining the problem you were trying to solve and the data you used. Then, you could discuss the exploratory data analysis you performed, the insights you gained, and the model you chose.

Next, you could present the results of your model. This could include the accuracy of your model, the most important features for predicting survival, and any interesting patterns or trends you discovered. For example, you might find that passenger class and gender were important factors in survival, which could lead to a discussion about the social dynamics of the time.
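The numbers behind such a results slide are simple to compute. The sketch below is a hedged illustration using only Python’s standard library: the records are invented and are not real Titanic data, and the helpers `accuracy` and `survival_rate_by` are hypothetical names chosen for this example.

```python
from collections import defaultdict

# Toy records invented for illustration; these are NOT real Titanic data.
# Each tuple: (passenger_class, sex, survived, model_prediction)
records = [
    (1, "female", 1, 1),
    (1, "male", 0, 1),
    (2, "female", 1, 1),
    (3, "male", 0, 0),
    (3, "female", 0, 1),
    (3, "male", 0, 0),
]


def accuracy(rows):
    """Share of rows where the model's prediction matches the actual outcome."""
    correct = sum(1 for _, _, actual, predicted in rows if actual == predicted)
    return correct / len(rows)


def survival_rate_by(rows, column):
    """Actual survival rate for each distinct value in the given column index."""
    groups = defaultdict(list)
    for row in rows:
        groups[row[column]].append(row[2])
    return {group: sum(values) / len(values) for group, values in groups.items()}


model_accuracy = accuracy(records)
rate_by_class = survival_rate_by(records, column=0)
rate_by_sex = survival_rate_by(records, column=1)
```

Reporting group-level rates alongside overall accuracy helps the audience see which factors drive the predictions, rather than only how often the model is right.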

Finally, don’t forget to discuss any challenges you faced during the project and how you overcame them, as well as any future improvements you would like to make to your model. This shows that you can reflect on your work and are always looking to improve, which are important qualities in a data scientist.

Remember, the goal is not just to present your results, but to tell a story with your data that engages your audience and demonstrates your understanding of the project.

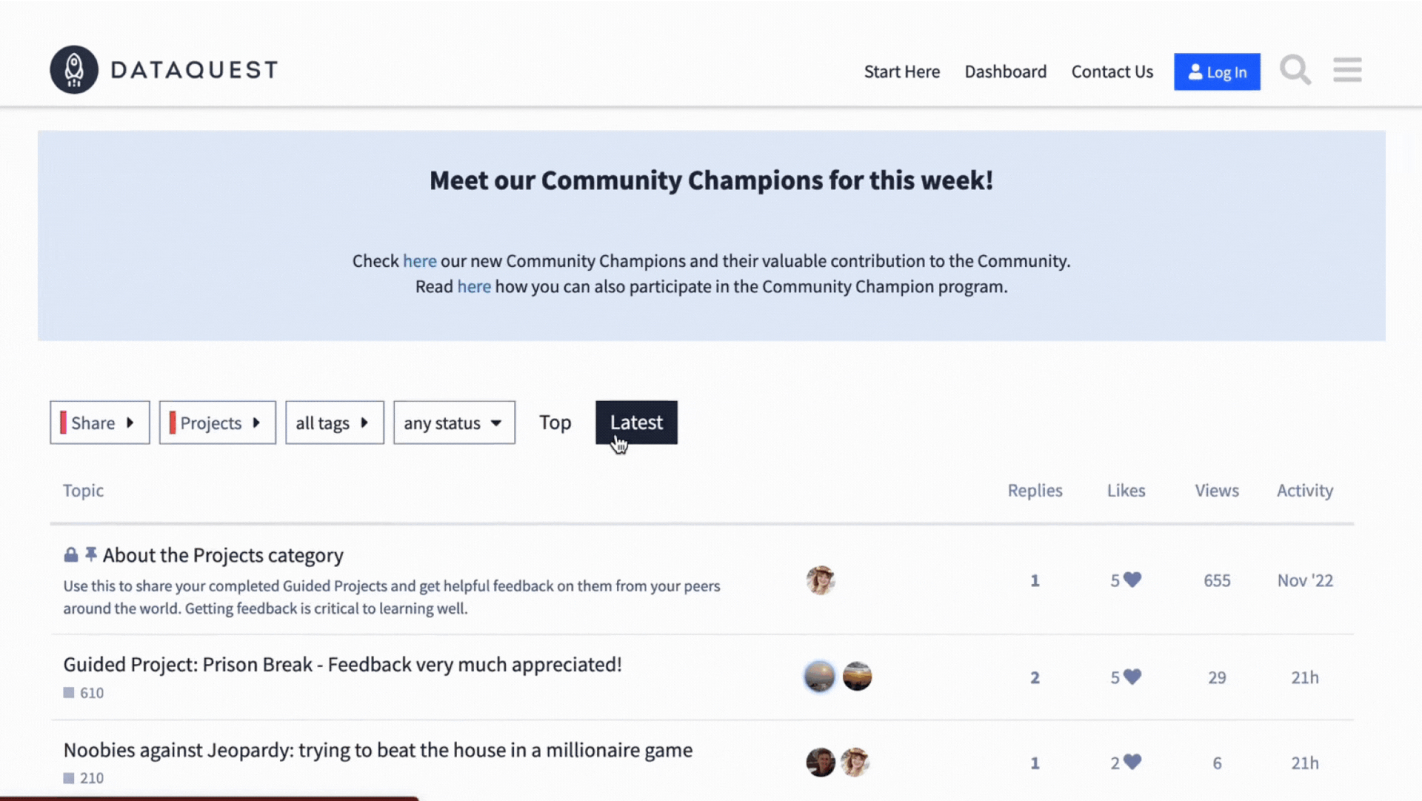

Get Feedback

After you’ve communicated your results, don’t forget to get feedback. This can help you improve your skills and make your future projects even better. Don’t be afraid of criticism. Instead, view it as a learning opportunity. You could ask your peers or mentors to review your project and provide feedback.

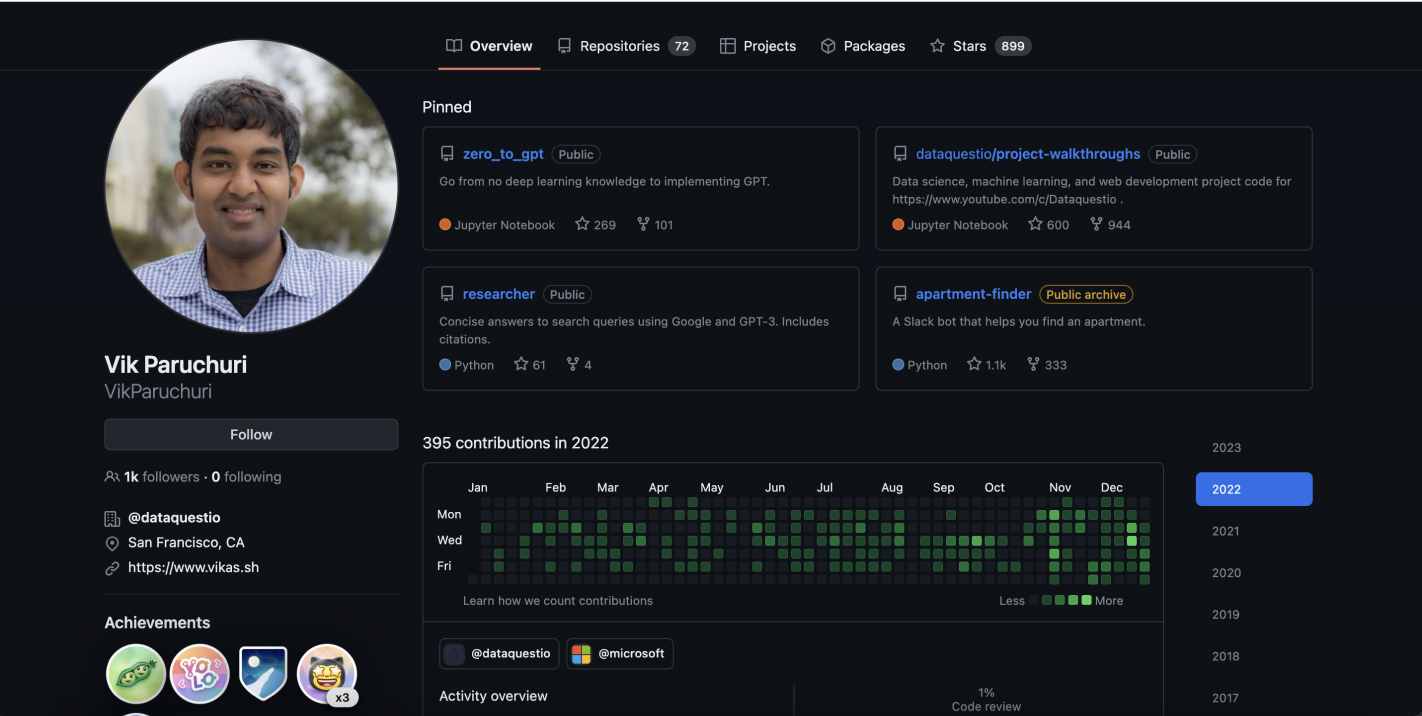

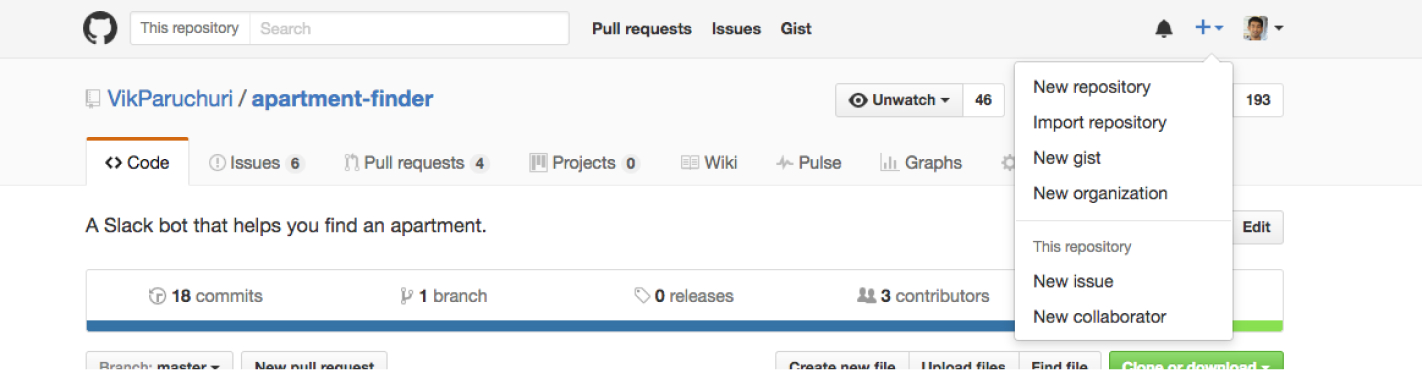

Upload Your Work on GitHub

Finally, showcase your hard work on GitHub. It’s a great platform to host your code, data files, models, and notebooks. This allows potential employers to see your code quality, organization skills, and style of documentation. For the house price prediction project, you might create a GitHub repository containing your code, the cleaned dataset, any visualizations you created, and a readme file explaining your project.

Link your GitHub repositories to your LinkedIn profile, resume, or personal website, which can help attract the attention of recruiters. Here’s a more detailed tutorial on how to upload and present your projects on GitHub.

Get Inspired: Projects in Our Community

In an ever-evolving field like data science, inspiration can come from numerous sources. What better source than our very own community, a thriving hub of learners and innovators who are pushing the boundaries of their knowledge every day. To make this journey less daunting for our newcomers and simultaneously shine a spotlight on some of the fantastic work being carried out, we’ve chosen a handful of noteworthy projects to highlight in this editorial.

Firstly, let’s talk about Joshua’s project on the “Oldest Businesses in the World”. Combining data merging and visualization skills with plotly express, Joshua has created a well-structured, comprehensive project that provides insightful business narratives through intuitive charts. This project is an excellent study for beginners interested in data visualization and storytelling using data.

Next, we have a remarkable project from Filipe on “Heavy Traffic Indicators on I-94”. The project stands out for its well-organized structure, clear goals, limitations, methodology, and astonishing plots, especially the map of the USA Census regions. This work should be a must-read for beginners who want to learn how to structure a data science project and communicate their results effectively.

Charles presents a perfect example of an end-to-end machine learning project with his work on “Classifying Heart Disease”. Charles takes us through each step of data preprocessing, modeling, and model tuning, explaining and illustrating each part with compelling charts. The outcome is a highly accurate model, underpinned by interesting insights and clear recommendations on how to overcome potential limitations.

Lastly, let’s shine a spotlight on Gaylord and his open-sourced “Rabet Africa Data Project”. This fully interactive website features insightful graphs for COVID-19 cases, vaccinations, forest cover, cereal production, and more for various countries in Africa. It’s a brilliant example of how data science can be used to bring about awareness and facilitate decision-making in real-world scenarios.

These projects are prime examples of the transformative power of data science when applied with creativity, passion, and persistence. We hope that by sharing these stories, we can inspire more learners to take that first step, or to continue their journey, in data science. Remember, every data scientist, regardless of their current proficiency, was once a beginner. Don’t let imposter syndrome deter you; instead, let it be a motivator. Learn, create, and most importantly, share your work with the community. There’s always someone who could benefit from your unique perspective.