How to Install and Configure Docker Swarm on Ubuntu

Docker Swarm mode offers the following features:

- Provides swarm management through the Docker Engine CLI, so you can create a swarm of Docker Engines and deploy application services to it. You don't need any additional software to create or manage a swarm.

- The swarm manager assigns a unique DNS name to each service in the swarm, so you can query every container running in the swarm through a DNS name.

- Each node in the swarm enforces TLS mutual authentication and encryption to secure communications between itself and all other nodes. You can also use self-signed root certificates or certificates from a custom root CA.

- Allows you to apply service updates to nodes incrementally.

- Allows you to specify an overlay network for your services and how to distribute service containers between nodes.

In this post, we will go through how to install and configure Docker Swarm mode on an Ubuntu 16.04 server. We will:

- Install Docker and open the required firewall ports on all nodes.

- Initialize the swarm on the Manager node.

- Add the Worker node to the swarm (more on nodes in the next section).

To get the most out of this post, you should have:

- Basic knowledge of Ubuntu and Docker.

- Two nodes with Ubuntu 16.04 installed.

- A non-root user with sudo privileges set up on both nodes.

- A static IP address configured on the Manager Node and on the Worker Node. Here, we will use IP 192.168.0.103 for the Manager Node and IP 192.168.0.104 for the Worker Node.

Let's start by looking at nodes.

Manager Nodes and Worker Nodes

Docker Swarm is made up of two main components:

- Manager Nodes

- Worker Nodes

Manager Nodes

Manager nodes handle cluster management tasks such as maintaining cluster state, scheduling services, and serving the swarm mode HTTP API endpoints. One of the most important features of Docker in Swarm Mode is the manager quorum. The manager quorum stores information about the cluster, and the consistency of that information is achieved through the Raft consensus algorithm. If a Manager node dies unexpectedly, another one can pick up its tasks and restore the services to a stable state.

Raft tolerates up to (N-1)/2 failures and requires a majority, or quorum, of (N/2)+1 members to agree on values proposed to the cluster (both rounded down to whole numbers). This means that in a cluster of 5 Managers running Raft, the quorum is 3 and up to 2 failures are tolerated; if 3 nodes are unavailable, the system cannot process any more requests to schedule additional tasks. The existing tasks keep running, but the scheduler cannot rebalance tasks to cope with failures while the manager set is unhealthy.

Worker Nodes

Worker nodes execute containers. They don't participate in the Raft distributed state and don't make scheduling decisions. You can create a swarm with a single Manager node, but you cannot have a Worker node without at least one Manager node. You can also promote a Worker node to Manager when you take a Manager node offline for maintenance.

As mentioned in the introduction, we use two nodes in this post: one acts as the Manager node and the other as the Worker node.
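The quorum arithmetic described above can be sketched in a few lines of shell. This is only an illustration of the (N/2)+1 and (N-1)/2 formulas using integer division; the function names quorum and tolerated_failures are made up for this example and are not part of Docker.

```shell
#!/bin/sh
# Sketch: Raft quorum arithmetic for a swarm with N manager nodes.
# The function names are illustrative, not Docker commands.

# Majority needed for the managers to agree: floor(N/2) + 1
quorum() {
  echo $(( $1 / 2 + 1 ))
}

# Manager failures tolerated while keeping quorum: floor((N-1)/2)
tolerated_failures() {
  echo $(( ($1 - 1) / 2 ))
}

for n in 1 3 5 7; do
  echo "managers=$n quorum=$(quorum "$n") tolerated_failures=$(tolerated_failures "$n")"
done
```

For 5 managers this prints a quorum of 3 and 2 tolerated failures, which matches the example in the text. An even manager count adds no extra tolerance (4 managers still tolerate only 1 failure), which is why odd counts are the usual choice.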

Getting Started

Before starting, update the package index and upgrade the installed packages to their latest versions with the following command:

sudo apt-get update -y && sudo apt-get upgrade -y

Once the packages are updated, restart the system to apply all the updates.

Install Docker

You will also need to install the Docker engine on both nodes. By default, the docker-ce package is not available in the Ubuntu 16.04 repository, so you will need to set up the Docker repository first. Start by installing the required packages with the following command:

sudo apt-get install apt-transport-https software-properties-common ca-certificates -y

Next, add the GPG key for Docker:

wget https://download.docker.com/linux/ubuntu/gpg && sudo apt-key add gpg

Then, add the Docker repository and update the package cache. Writing to /etc/apt/sources.list requires root privileges, so the line is piped through sudo tee instead of being redirected with >>, which would run in your unprivileged shell:

echo "deb [arch=amd64] https://download.docker.com/linux/ubuntu xenial stable" | sudo tee -a /etc/apt/sources.list

sudo apt-get update -y

Finally, install the Docker engine using the following command:

sudo apt-get install docker-ce -y

Once Docker is installed, start the Docker service and enable it to start at boot time:

sudo systemctl start docker && sudo systemctl enable docker

By default, the Docker daemon always runs as the root user, and other users can only access it using sudo. If you want to run the docker command without sudo, create a Unix group called docker and add users to it. The docker-ce package usually creates this group already, so run the two commands separately; if groupadd reports that the group exists, continue with the second command:

sudo groupadd docker

sudo usermod -aG docker dockeruser

Next, log out and log back in to your system as dockeruser so that your group membership is re-evaluated.

Note: Remember to run the above commands on both nodes.
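As a small sketch of the repository step, the apt source line can be built from the release codename instead of being typed by hand. The helper name docker_repo_line is hypothetical; the URL and the xenial codename are the ones used in this post. The commented usage assumes lsb_release is installed, and it appends through sudo tee because a redirect after sudo echo is performed by the calling shell, not by sudo.

```shell
#!/bin/sh
# Sketch: build the Docker apt source line for a given Ubuntu codename.
# docker_repo_line is an illustrative helper, not a Docker or apt command.
docker_repo_line() {
  codename=$1   # e.g. xenial for Ubuntu 16.04
  echo "deb [arch=amd64] https://download.docker.com/linux/ubuntu $codename stable"
}

# Print the line for Ubuntu 16.04 so it can be reviewed first.
docker_repo_line xenial

# On a node, one way to append it with root privileges (assumes lsb_release exists):
#   docker_repo_line "$(lsb_release -cs)" | sudo tee -a /etc/apt/sources.list
```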

Configure firewall

You will need to configure firewall rules on both nodes for the swarm cluster to work properly. Allow the ports 2376, 2377, 7946, 4789, and 80 using the UFW firewall with the following command:

sudo ufw allow 2376/tcp && sudo ufw allow 7946/udp && sudo ufw allow 7946/tcp && sudo ufw allow 80/tcp && sudo ufw allow 2377/tcp && sudo ufw allow 4789/udp

If you administer the nodes over SSH, also allow the SSH port (22/tcp by default) before enabling UFW, because UFW denies incoming connections by default.

Next, reload the UFW firewall and enable it to start on boot:

sudo ufw reload && sudo ufw enable

Restart the Docker service so that the new rules take effect for Docker:

sudo systemctl restart docker
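The port list is easy to mistype in one long command, so here is a hedged sketch that keeps the rules in a single variable and prints one ufw command per rule for review. The variable SWARM_PORTS and the function ufw_rules are names invented for this example; the ports and protocols are the ones listed in this section.

```shell
#!/bin/sh
# Sketch: generate the ufw commands for the swarm ports used in this post.
# SWARM_PORTS and ufw_rules are illustrative names.
SWARM_PORTS="2376/tcp 2377/tcp 7946/tcp 7946/udp 4789/udp 80/tcp"

# Print (rather than run) one command per rule, as a dry run.
ufw_rules() {
  for rule in $SWARM_PORTS; do
    echo "sudo ufw allow $rule"
  done
}

ufw_rules

# After reviewing the output, the rules could be applied with:
#   ufw_rules | sh
```

Printing first and applying second makes it easier to spot a missing or duplicated port before the firewall changes.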

Create Docker Swarm cluster

First, initialize the cluster with the IP address of the node that will act as the Manager node. On the Manager Node, run the following command to set the advertised IP address:

docker swarm init --advertise-addr 192.168.0.103

You should see the following output:

Swarm initialized: current node (iwjtf6u951g7rpx6ugkty3ksa) is now a manager.

To add a worker to this swarm, run the following command:

docker swarm join --token SWMTKN-1-5p5f6p6tv1cmjzq9ntx3zmck9kpgt355qq0uaqoj2ple629dl4-5880qso8jio78djpx5mzbqcfu 192.168.0.103:2377

To add a manager to this swarm, run 'docker swarm join-token manager' and follow the instructions.

The token shown in the above output will be used to add worker nodes to the cluster in the next step. The Docker Engine joins the swarm as a worker or as a manager depending on the join token you provide to the docker swarm join command. The node only uses the token at join time. If you subsequently rotate the token, it doesn't affect existing swarm nodes.

Now, check the status of the Manager Node with the following command:

docker node ls

If everything is fine, you should see the following output:

ID HOSTNAME STATUS AVAILABILITY MANAGER STATUS

iwjtf6u951g7rpx6ugkty3ksa * Manager-Node Ready Active Leader

You can also check the status of the Docker Swarm Cluster:

docker info

You should see the following output:

Containers: 0

Running: 0

Paused: 0

Stopped: 0

Images: 0

Server Version: 17.09.0-ce

Storage Driver: overlay2

Backing Filesystem: extfs

Supports d_type: true

Native Overlay Diff: true

Logging Driver: json-file

Cgroup Driver: cgroupfs

Plugins:

Volume: local

Network: bridge host macvlan null overlay

Log: awslogs fluentd gcplogs gelf journald json-file logentries splunk syslog

Swarm: active

NodeID: iwjtf6u951g7rpx6ugkty3ksa

Is Manager: true

ClusterID: fo24c1dvp7ent771rhrjhplnu

Managers: 1

Nodes: 1

Orchestration:

Task History Retention Limit: 5

Raft:

Snapshot Interval: 10000

Number of Old Snapshots to Retain: 0

Heartbeat Tick: 1

Election Tick: 3

Dispatcher:

Heartbeat Period: 5 seconds

CA Configuration:

Expiry Duration: 3 months

Force Rotate: 0

Autolock Managers: false

Root Rotation In Progress: false

Node Address: 192.168.0.103

Manager Addresses:

192.168.0.103:2377

Runtimes: runc

Default Runtime: runc

Init Binary: docker-init

containerd version: 06b9cb35161009dcb7123345749fef02f7cea8e0

runc version: 3f2f8b84a77f73d38244dd690525642a72156c64

init version: 949e6fa

Security Options:

apparmor

seccomp

Profile: default

Kernel Version: 4.4.0-45-generic

Operating System: Ubuntu 16.04.1 LTS

OSType: linux

Architecture: x86_64

CPUs: 1

Total Memory: 992.5MiB

Name: Manager-Node

ID: R5H4:JL3F:OXVI:NLNY:76MV:5FJU:XMVM:SCJG:VIL5:ISG4:YSDZ:KUV4

Docker Root Dir: /var/lib/docker

Debug Mode (client): false

Debug Mode (server): false

Registry: https://index.docker.io/

Experimental: false

Insecure Registries:

127.0.0.0/8

Live Restore Enabled: false
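The docker info output is long, and only a few fields matter for confirming that swarm mode is active. The following is a minimal sketch, assuming the plain-text layout shown above, that extracts a single field by its label. The function info_field is a hypothetical helper, and the sample text is a few lines copied from the output above so that the example runs without Docker.

```shell
#!/bin/sh
# Sketch: pull one field out of `docker info` text read from stdin.
# info_field is an illustrative helper, not a Docker command.
info_field() {
  # $1 is the label exactly as printed, e.g. "Swarm" or "Is Manager"
  sed -n "s/^[[:space:]]*$1:[[:space:]]*//p" | head -n 1
}

# Sample input: lines copied from the docker info output above.
sample_info='Swarm: active
NodeID: iwjtf6u951g7rpx6ugkty3ksa
Is Manager: true
Managers: 1
Nodes: 1'

echo "state=$(printf '%s\n' "$sample_info" | info_field 'Swarm')"
echo "is_manager=$(printf '%s\n' "$sample_info" | info_field 'Is Manager')"

# On a real node the same helper could be fed live output:
#   docker info | info_field 'Swarm'
```

On the Manager Node from this post, the values to look for are a Swarm state of active and Is Manager set to true.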

Add Worker Node to swarm cluster

The Manager Node is now configured properly, so it's time to add the Worker Node to the Swarm Cluster. Copy the docker swarm join command from the output of the "swarm init" command in the previous step, then run it on the Worker Node to join the Swarm Cluster:

docker swarm join --token SWMTKN-1-5p5f6p6tv1cmjzq9ntx3zmck9kpgt355qq0uaqoj2ple629dl4-5880qso8jio78djpx5mzbqcfu 192.168.0.103:2377

You should see the following output:

This node joined a swarm as a worker.

Now, on the Manager Node, run the following command to list the Worker Node:

docker node ls

You should see the Worker Node in the following output:

ID HOSTNAME STATUS AVAILABILITY MANAGER STATUS

iwjtf6u951g7rpx6ugkty3ksa * Manager-Node Ready Active Leader

snrfyhi8pcleagnbs08g6nnmp Worker-Node Ready Active

Launch web service in Docker Swarm

The Docker Swarm Cluster is now up and running, so it's time to launch the web service inside Docker Swarm Mode. On the Manager Node, run the following command to deploy a web server service:

docker service create --name webserver -p 80:80 httpd

The above command creates an Apache web server container and publishes it on port 80, so you can access the Apache web server from a remote system. Now, you can check the running service with the following command:

docker service ls

You should see the following output:

ID NAME MODE REPLICAS IMAGE PORTS

nnt7i1lipo0h webserver replicated 0/1 httpd:latest *:80->80/tcp

Next, scale the web server service across two containers with the following command:

docker service scale webserver=2
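The REPLICAS column in the docker service ls output shows running tasks over desired tasks, so 0/1 means the single replica is still starting. As a rough sketch, the check below compares the two numbers. The function replicas_converged is a hypothetical helper that takes the column value as text; it does not call Docker itself.

```shell
#!/bin/sh
# Sketch: decide whether a REPLICAS value such as "2/2" has converged.
# replicas_converged is an illustrative helper, not a Docker command.
replicas_converged() {
  running=${1%%/*}   # text before the slash
  desired=${1##*/}   # text after the slash
  [ "$running" -eq "$desired" ]
}

for value in 0/1 1/2 2/2; do
  if replicas_converged "$value"; then
    echo "$value: all replicas running"
  else
    echo "$value: still starting"
  fi
done
```

After scaling to two replicas, the service is ready once the column reads 2/2.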

Then, check the status of the web server service with the following command:

docker service ps webserver

You should see the following output:

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

7roily9zpjvq webserver.1 httpd:latest Worker-Node Running Preparing about a minute ago

r7nzo325cu73 webserver.2 httpd:latest Manager-Node Running Preparing 58 seconds ago Test Docker Swarm

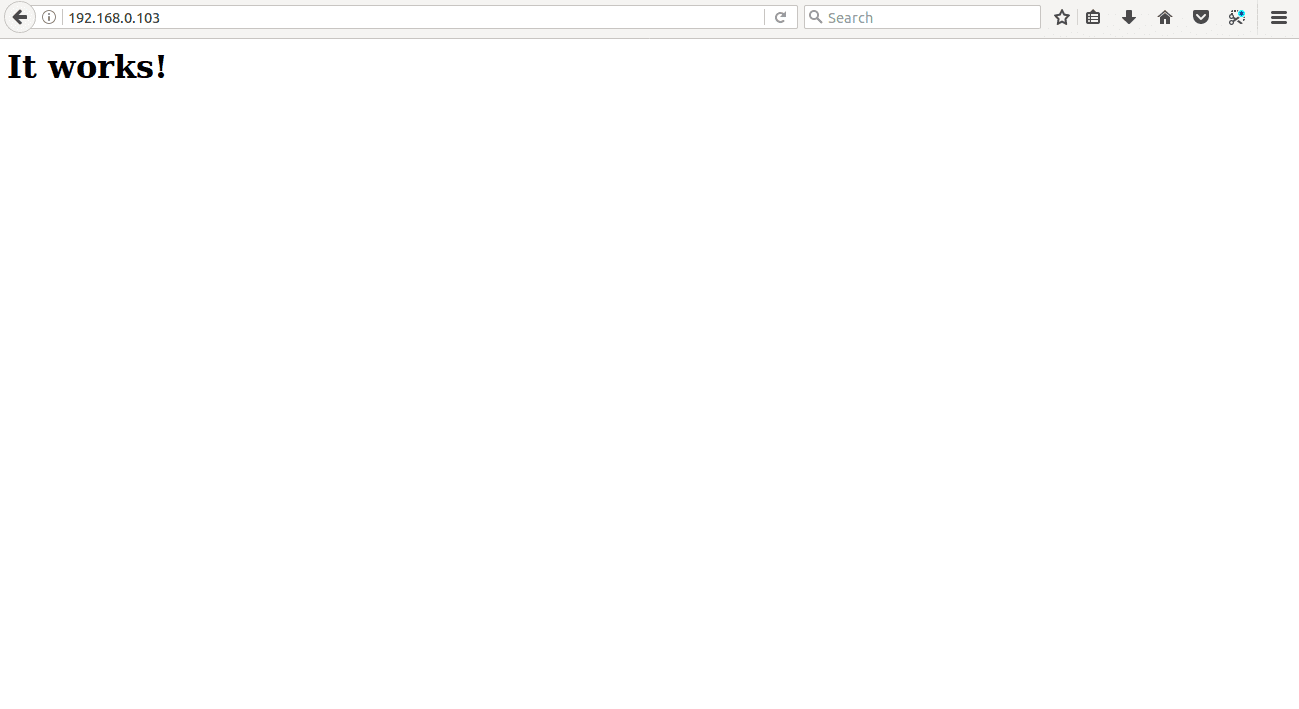

Apache web server is now running on Manager Node. You can now access web server by pointing your web browser to the Manager Node IP

or Worker Node IP as shown below:  Apache web server service is now distributed across both nodes. Docker Swarm also provides high availability for your service. If the web server goes down on the Worker Node, then the new container will be launched on the Manager Node. To test high availability, just stop the Docker service on the Worker Node:

Apache web server service is now distributed across both nodes. Docker Swarm also provides high availability for your service. If the web server goes down on the Worker Node, then the new container will be launched on the Manager Node. To test high availability, just stop the Docker service on the Worker Node: sudo systemctl stop docker On the Manager Node, run the web server service status with the following command: docker service ps webserver You should see that a new container is launched on Manager Node:

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

ia2qc8a5f5n4 webserver.1 httpd:latest Manager-Node Ready Ready 1 second ago

7roily9zpjvq \_ webserver.1 httpd:latest Worker-Node Shutdown Running 15 seconds ago

r7nzo325cu73 webserver.2 httpd:latest Manager-Node Running Running 23 minutes ago

Conclusion

Congratulations! You have successfully installed and configured a Docker Swarm cluster on Ubuntu 16.04. Swarm is designed to scale well beyond two nodes (figures of up to a thousand nodes and fifty thousand containers have been cited), though you should measure performance for your own workload rather than assume there is no degradation. Now that you have a basic cluster set up, head over to the Docker Swarm documentation to learn more about Swarm. You'll want to look into configuring your cluster with more than just one Manager Node, according to your organization's high-availability requirements.