How I Scraped Over 25,000 Forum Posts In 3 Steps

Motivation

The Dataquest Community is evolving. Over the past months, I’ve been watching the number of active users, new topics, and areas of discussion grow. In the last six months, the platform team has also put a lot of work into developing tags that make it easier to filter and search for relevant information.

But let’s try to create a list of tags directly from our posts and messages. Maybe they will be better than what the Dataquest team offered us, maybe not. But I think it will definitely be a lot of fun.

I plan to write several articles over the course of this study, and this is the first of them. It focuses on what we should end up with, what data we can use and where to get it, as well as on the data collection itself.

Plan

What we need to get this done:

- Data

- Approach to modeling

- Beer (Do we really need anything else then?)

- Profit

But let’s go over our complicated plan in more detail.

1. Data

What data could we build our tags from?

- Topic titles

- The text of the topics

- Text of posts in the topic

- A combination of the three

Topic titles - this should give us the cleanest results. As the community has evolved, people have started to write cleaner headlines. They understand that the title is what others see first, so the better it identifies the problem, the more likely the problem is to get solved.

The text of the topics - this gives the reader more information, but it also adds noise. English, unlike Russian, has a stricter structure and fewer syntactic variations. Nevertheless, many of us are not native speakers, and there are individual writing styles and rare words. But the main problem is that the text usually contains code. This can be useful (e.g. we could create a tag for a library based on how often we see the ‘import lib’ phrase), and it can also be absolutely useless: think variable names, basic functions, libraries, etc.
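To make the ‘import lib’ idea concrete, here is a minimal sketch that assumes topic texts are plain strings with code pasted in line by line. It counts the top-level module named on lines that start with import or from. The function names and the min_count threshold are hypothetical. It also shows the noise problem in miniature: a prose line that happens to start with a lowercase "from" would be counted too.

```python
import re
from collections import Counter

# Lines starting with "import x" or "from x import ..."; captures the top-level name x
IMPORT_RE = re.compile(r"^\s*(?:import|from)\s+([A-Za-z_]\w*)", re.MULTILINE)


def count_imported_libraries(texts):
    """Count how often each top-level library is imported across topic texts."""
    counts = Counter()
    for text in texts:
        counts.update(IMPORT_RE.findall(text))
    return counts


def library_tags(texts, min_count=2):
    """Keep libraries imported at least min_count times as tag candidates."""
    counts = count_imported_libraries(texts)
    return sorted(lib for lib, n in counts.items() if n >= min_count)
```

For "from sklearn.linear_model import LinearRegression" the pattern captures only "sklearn", so submodules collapse into one library tag.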

Text of posts in the topic - sometimes the posts in a topic are much more useful than the topic itself. They give a solution to a problem or an alternative view of it (perhaps your alternative view on this article is “What the heck is he talking about?” or “Wow, I can’t get enough of it!”). But many posts are useless for our purpose: they may be words of encouragement, or reasoning and clarifications that go far off-topic. Those are important to us as humans, but are they necessary for tags?

So, I plan to do all my experiments with 3 kinds of data.

- Titles only

- Titles + topic text

- Titles + topic text + posts in topics

In the third variant, we’ll merge each topic’s title and text into one document, but we’ll treat every post as a separate document.
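The three variants can be sketched as a single function. This is a minimal illustration, assuming (hypothetically) that each topic is a dict with "title" and "text" keys and each post is a dict with a "text" key; the real records in the database may be shaped differently.

```python
def build_documents(topics, posts, variant):
    """Build the list of text documents for one of the three data variants."""
    if variant == 1:  # titles only
        return [t["title"] for t in topics]
    # Title and topic text merged into one document per topic
    topic_docs = [f'{t["title"]}\n{t["text"]}' for t in topics]
    if variant == 2:
        return topic_docs
    if variant == 3:  # same as 2, plus every post as its own document
        return topic_docs + [p["text"] for p in posts]
    raise ValueError(f"Unknown variant: {variant}")
```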

But where do we get the data?

I think if I had approached the Dataquest team, I could have gotten a slice of their database for my research. But real heroes always take the hard way, so as a web scraping expert (many of you know me as the you-don’t-need-Selenium-for-this-task guy), I’m going to write a crawler for Dataquest. It’s time to show my skills!

2. Modeling Approach

We have the data. So how do we get the tags out of it? I believe this is a natural language processing problem known as topic modeling, with a twist: we need to reduce each type of discussion to its keywords alone. That’s great, but which of the many topic modeling approaches should we use? LSA, LDA, deep learning?

How about we try every approach I can find (you can read about them here) and compare the outcomes at the end? Or maybe you could take part in the research too, and it would take a completely different path from the one I’m imagining right now. Either way, trying everything sounds great. It also sounds ambitious.

3. Profit

And what kind of outcomes are we supposed to get? Fun is good, but you can’t buy beer with it. So we’re going to go for the tangibles.

We’ll work through the different approaches to topic modeling using an easier case as an example, and see which of them work better. If it turns out we can build a good tool from this, we will finish the series with an application and its deployment.

So, how many tags do we want to get? Let’s say 100, but they’ve got to be awesome! Or not. Let’s get started.

Collecting Data

Scanning strategy

After doing some research on the site (https://community.dataquest.io/), I came up with the following plan.

To collect data, we actually need three steps:

- Scroll through https://community.dataquest.io/latest from beginning to end, collecting the links to all topics.

- Visit each topic to get its body.

- Inside each topic, scroll through the posts to collect all of them, because we have monsters like https://community.dataquest.io/t/welcome-to-our-community/236/ with over 900 posts.

Perhaps some of you are already wondering how I’m going to render JavaScript without Selenium. Or have I decided that, in this case, Selenium makes sense?

No (surprise, surprise!). You don’t need Selenium for this task.

This is how we are going to get the data:

- To scroll through the catalog of topics, we use https://community.dataquest.io/latest.json?ascending=false&no_definitions=true&page=0 and simply change the page parameter to ‘turn pages’. We keep turning them as long as the current page contains a more_topics_url parameter; when it is missing, we have reached the last page.

- Getting the posts is a little more complicated. We have a link with the template f"https://community.dataquest.io/t/{post_id}/posts.json?{posts_ids}&include_suggested=true", which returns the body of the topic and the posts in it. We already got the topic id, post_id, in the previous step. But where do we get posts_ids, the list of ids of the messages in the topic? For that, we have to open the topic’s HTML page directly and collect the ids of all posts linked to it.

Surprised that the post ids are simply stored in the body of the topic’s page? Discourse is a very redundant system that transmits much more data than necessary. But that is the reality of the 21st century: the developer’s time is more valuable than your resources. Once we have these ids, we can freely access any message.

Here is an example: https://community.dataquest.io/t/236/posts.json?post_ids[]=34390&post_ids[]=34400&post_ids[]=34447&post_ids[]=34533&post_ids[]=34639&post_ids[]=34668&post_ids[]=34746&post_ids[]=34794&post_ids[]=34850&post_ids[]=34910&post_ids[]=34920&post_ids[]=34932&post_ids[]=34964&post_ids[]=34965&post_ids[]=35083&post_ids[]=35214&post_ids[]=35215&post_ids[]=35306&post_ids[]=35309&post_ids[]=35348&include_suggested=true
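Both URL patterns can be sketched in plain Python. This is a minimal illustration, not the crawler’s actual code. It assumes the Discourse layout of latest.json (a "topic_list" object holding "topics" and, on every page except the last, "more_topics_url"), takes a hypothetical fetch_json callable so the paging logic does not depend on any HTTP library, and assumes batches of 20 post ids per posts.json request, which is how many ids the example URL above carries. Extracting the ids from the topic’s HTML page is left out, since it depends on the page markup.

```python
from urllib.parse import urlencode

BASE_URL = "https://community.dataquest.io"
LATEST_URL = BASE_URL + "/latest.json?ascending=false&no_definitions=true&page={page}"


def parse_topic_page(data):
    """Return (topic ids, has_more) for one latest.json page (assumed layout)."""
    topic_list = data.get("topic_list", {})
    topic_ids = [topic["id"] for topic in topic_list.get("topics", [])]
    return topic_ids, "more_topics_url" in topic_list


def collect_topic_ids(fetch_json):
    """Turn pages 0, 1, 2, ... until a page no longer has more_topics_url."""
    page, all_ids = 0, []
    while True:
        topic_ids, has_more = parse_topic_page(fetch_json(LATEST_URL.format(page=page)))
        all_ids.extend(topic_ids)
        if not has_more:
            return all_ids
        page += 1


def build_posts_urls(topic_id, post_ids, batch_size=20):
    """Build posts.json URLs that fetch the given posts, batch_size ids per request."""
    urls = []
    for start in range(0, len(post_ids), batch_size):
        batch = post_ids[start:start + batch_size]
        # safe="[]" keeps "post_ids[]" as-is instead of encoding it as "post_ids%5B%5D"
        query = urlencode([("post_ids[]", pid) for pid in batch], safe="[]")
        urls.append(f"{BASE_URL}/t/{topic_id}/posts.json?{query}&include_suggested=true")
    return urls
```

For example, build_posts_urls(236, [34390, 34400]) returns one URL with the same shape as the example above, just with two ids.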

Choice of technologies

So how do we do it?

Single-threading sounds as sad as Selenium.

Multithreading? I’m good at it, but then we either need to know all the links we’re going to process in advance or use queues and synchronization. Honestly, there’s nothing difficult about this approach. Even with a bad Internet connection, it takes about 5 minutes to figure out that there are 261 pages listing all the threads.

Given that, you can split the 261 pages across multiple threads to collect all the necessary links, and then split the little-over-7,800 topics across threads as well. After that, you can either collect all the messages the same way or pass the data through queues.
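For comparison, the thread-based version described above could look roughly like this. fetch_page and fetch_topic are hypothetical blocking callables (for example, thin wrappers around requests.get), and the two stages correspond to the listing pages and then the topics they link to. Note that the page count has to be known before the crawl starts.

```python
from concurrent.futures import ThreadPoolExecutor


def crawl_with_threads(fetch_page, fetch_topic, total_pages, workers=8):
    """Two-stage threaded crawl: listing pages first, then every topic they link to.

    fetch_page(page) -> list of topic ids; fetch_topic(topic_id) -> topic data.
    """
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # Stage 1: the number of pages is known up front, so split it across threads
        pages = list(pool.map(fetch_page, range(total_pages)))
        topic_ids = [topic_id for page in pages for topic_id in page]
        # Stage 2: split the collected topics across the same threads
        # (pool.map keeps results in input order)
        return list(pool.map(fetch_topic, topic_ids))
```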

But there are already countless articles about that. So let’s use asyncio instead. Asynchronous Python for input/output operations has been the trend of the last few years, and even though we’re not building a web application, it works for us too.

So our web request library is going to be aiohttp. There are other libraries for web requests, but I think you need good control over your request flow, and aiohttp is very well documented, so it suits us. Also, bear in mind that almost all web request libraries in Python try to follow the API of requests, so the code will look familiar.

I’m going to use MongoDB to store the data. Firstly, because it’s undeservedly overlooked in the community. Secondly, to be honest, storing data in it takes less time than in SQL databases.

Well, we finally get to the code.

A simple class for working with the database:

```python
from motor.motor_asyncio import AsyncIOMotorClient

# Assumed settings; the full project keeps these in its own config
MONGODB_SETTINGS = {"host": "mongodb://localhost:27017", "db": "dataquest"}


class MongoManage:
    def __init__(self):
        self.client = AsyncIOMotorClient(MONGODB_SETTINGS.get("host"))
        connect = self.client[MONGODB_SETTINGS.get("db", "dataquest")]
        self.topic_collection = connect.topic_collection
        self.posts_collection = connect.posts_collection

    async def get_data(self, collection, filter_query, filter_fields):
        # Never return Mongo's internal _id; merge in the caller's projection (Python 3.9+)
        current_filter_fields = {"_id": 0}
        current_filter_fields |= filter_fields
        cursor = collection.find(filter_query, current_filter_fields)
        # An async generator, so callers can iterate with `async for`
        return (doc async for doc in cursor)

    async def write_data(self, collection, data):
        # Motor's insert methods are coroutines and must be awaited
        if isinstance(data, list):
            await collection.insert_many(data)
        else:
            await collection.insert_one(data)

    async def get_topics(self, *args):
        return await self.get_data(self.topic_collection, *args)

    async def write_topics(self, *args):
        await self.write_data(self.topic_collection, *args)

    async def get_posts(self, *args):
        return await self.get_data(self.posts_collection, *args)

    async def write_posts(self, *args):
        await self.write_data(self.posts_collection, *args)

    async def close(self):
        # Motor's client.close() is a regular (synchronous) method
        self.client.close()
```

Since I only have two collections, I wrote simple get and write methods for each of them. In fact, in all the code you’ll see below, I only need to call these get and write functions. If I notice that I make certain queries more often than others, I can add them to this class as well. I think this is convenient enough.

You will notice that async/await is used here.

Since the data collection is asynchronous, I don’t want my code to block while it works with the database. In fact, the main difficulty with asyncio is understanding which operations can block your code. On top of that, you need to find asynchronous libraries and drivers. For MongoDB, this is Motor.
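Here is a small, self-contained illustration of what ‘blocking’ means. Three calls that each wait 0.2 seconds run concurrently: time.sleep stands in for any blocking operation (such as a synchronous database driver), asyncio.sleep for a non-blocking one, and asyncio.to_thread (Python 3.9+) shows one way to move unavoidable blocking work off the event loop. The function names are made up for this demo.

```python
import asyncio
import time


async def blocking_call():
    time.sleep(0.2)  # synchronous: freezes the whole event loop for 0.2 s


async def non_blocking_call():
    await asyncio.sleep(0.2)  # hands control back to the loop while waiting


async def offloaded_call():
    await asyncio.to_thread(time.sleep, 0.2)  # blocking work moved to a worker thread


async def run_three(make_call):
    """Run three copies of a call concurrently and return the elapsed seconds."""
    start = time.perf_counter()
    await asyncio.gather(make_call(), make_call(), make_call())
    return time.perf_counter() - start


if __name__ == "__main__":
    for call in (blocking_call, non_blocking_call, offloaded_call):
        print(f"{call.__name__}: {asyncio.run(run_three(call)):.2f} s")
```

The blocking version takes about 0.6 seconds because the three calls run one after another; the other two finish in about 0.2 seconds.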

Coding

What you did NOT want to know about Asyncio in web scraping

If you scrape data with asyncio, the most important rule you should follow is REQUEST CONTROL.

Under the hood, every request that runs concurrently needs its own socket. That means if you create 500 requests to one site without awaiting the request function, all 500 go out at practically the same time, over as many connections as your client allows. Have you heard of DDoS attacks? Congratulations, you’ve started your journey in that direction.

Example:

```python
for _ in range(500):
    # No await: you have just fired 500 requests at the site
    asyncio.create_task(asyncio_requests())
```

You could also do this:

```python
for _ in range(500):
    # With await: you make 1 request and wait for it to finish
    await asyncio.create_task(asyncio_requests())
```

You just made your code synchronous. Then why bother using asyncio at all?

This is a joke, of course, but it’s also 100% true.

In asyncio, it is very easy to end up generating cascading requests.

One page of the topic list contains 30 topics, so each page produces 31 requests: 30 for the topics plus 1 for the next page. The site may not manage to respond to all of them before you create another 31, so the load on the server keeps growing.

We need to remember that every site’s resources are limited. One site may handle no more than 100 parallel requests, another can handle a thousand. But you should understand that the load you create interferes with other users or forces the server to scale, and that costs money. This is why some sites put anti-scraping protection in place. I won’t mention any workarounds for such protection other than using a proxy.

So much for the problem. In Dataquest’s case, you will get a response telling you that you are making too many requests.

How do we solve this problem in asyncio? Use a Semaphore.
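To see what a semaphore changes, here is a self-contained sketch with no network access: asyncio.sleep plays the role of the server, and a hypothetical Tracker records how many fake requests overlap. crawl(31) mirrors one page of topics (30 topics plus the next page) fired without any control; passing limit=3 wraps every request in asyncio.Semaphore(3).

```python
import asyncio


class Tracker:
    """Records how many fake requests are in flight at the same moment."""

    def __init__(self):
        self.in_flight = 0
        self.peak = 0


async def hit_server(tracker, latency=0.05):
    tracker.in_flight += 1
    tracker.peak = max(tracker.peak, tracker.in_flight)
    await asyncio.sleep(latency)  # stands in for the network round trip
    tracker.in_flight -= 1


async def limited_request(tracker, semaphore):
    async with semaphore:  # waits here while `limit` requests are already running
        await hit_server(tracker)


async def crawl(n_requests, limit=None):
    """Start n_requests tasks at once and return the peak concurrency observed."""
    tracker = Tracker()
    if limit is None:
        coros = [hit_server(tracker) for _ in range(n_requests)]
    else:
        semaphore = asyncio.Semaphore(limit)
        coros = [limited_request(tracker, semaphore) for _ in range(n_requests)]
    await asyncio.gather(*(asyncio.create_task(c) for c in coros))
    return tracker.peak


if __name__ == "__main__":
    print(asyncio.run(crawl(31)))           # 31: every request hits the server at once
    print(asyncio.run(crawl(31, limit=3)))  # 3: the same work, never more than 3 at a time
```

All the tasks are still created immediately; the semaphore only decides how many of them may talk to the server at the same time.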

I created a class that doesn’t allow the number of concurrent requests to exceed a set limit and also caches responses on disk.

```python
import asyncio
import logging
from hashlib import md5
from pathlib import Path

from aiofiles import open as aopen
from aiohttp import ClientSession

logger = logging.getLogger(__name__)

# Assumed settings; the full project defines these in its own config
CACHE_PATH = Path("cache")
HEADERS = {"User-Agent": "Mozilla/5.0"}
REQUESTS_LIMIT = 3  # the limit used later in this article


class Downloader:
    cache_path = CACHE_PATH
    attempts = 20

    def __init__(self):
        # Semaphore: at most REQUESTS_LIMIT requests can be in flight at once
        self.locker = asyncio.Semaphore(REQUESTS_LIMIT)
        self.cache_path.mkdir(parents=True, exist_ok=True)

    async def start(self):
        self.session = ClientSession(headers=HEADERS)

    async def stop(self):
        await self.session.close()

    async def request(self, method, url, *args, **kwargs):
        async with self.locker:  # wait here while REQUESTS_LIMIT requests are running
            for _ in range(self.attempts):
                async with self.session.request(method, url, *args, **kwargs) as resp:
                    if resp.status == 200:
                        return await resp.read()
                await asyncio.sleep(2)  # any other status: back off, then retry
        return None  # every attempt failed

    async def get(self, *args, **kwargs):
        return await self.cache("GET", *args, **kwargs)

    async def post(self, *args, **kwargs):
        return await self.cache("POST", *args, **kwargs)

    async def cache(self, method, url, *args, **kwargs):
        # One file per URL, named by its MD5 hash (the request body is not part of the key)
        file_name = f"{md5(url.encode()).hexdigest()}.cache"
        file_path = Path(self.cache_path, file_name)
        if file_path.exists():
            async with aopen(file_path, "rb") as f:
                response_data = await f.read()
            logger.info(f"Url {url} read from {file_name} cache")
        else:
            response_data = await self.request(method, url, *args, **kwargs)
            # Never cache an empty or rate-limited response
            if not response_data or b"Too Many Requests" in response_data:
                raise Exception(f"No data for {url}")
            async with aopen(file_path, "wb") as f:
                await f.write(response_data)
            logger.info(f"Url {url} cached in {file_name} cache")
        return response_data
```

Caching - Courtesy of the Web Parser

Why bother caching data to the disk?

Imagine you visited a page and got the results, and then noticed that you forgot to include one or more fields in them. You need to rerun the script, which takes time and creates load on the site.

If you cache the data, then instead of rerunning your script against the website, you simply read the data from your disk. The data is accessible at any time, and you can change the result fields in the database and update the data very quickly, all without creating additional load on the site.

Of course, if you need to update the data regularly, you have to create a lifecycle mechanism for the cache. For example, if data has to be updated daily, then you have to remove all cache files from the previous day.
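One simple lifecycle policy, sketched under two assumptions: cache files use the .cache extension as in the Downloader above, and ‘stale’ means older than a fixed age. The idea is to delete stale files by modification time before a new run. purge_stale_cache and max_age_seconds are hypothetical names.

```python
import time
from pathlib import Path


def purge_stale_cache(cache_dir, max_age_seconds=24 * 60 * 60):
    """Delete *.cache files last modified more than max_age_seconds ago.

    Returns the names of the removed files, sorted.
    """
    now = time.time()
    removed = []
    for path in Path(cache_dir).glob("*.cache"):
        if now - path.stat().st_mtime > max_age_seconds:
            path.unlink()
            removed.append(path.name)
    return sorted(removed)
```

Calling purge_stale_cache(CACHE_PATH) at the start of a daily run means yesterday’s responses are downloaded again, while anything fetched earlier the same day is still read from disk.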

Optimization and launch

In my script, I used a limit of no more than 3 concurrent requests. Considering that the data also has to be saved to the database, this gives pretty high performance even without a proxy.

Each parser function either saves its results to the database or passes the data on to the next function. I don’t have to worry about additional data synchronization, and I get a high degree of concurrency with low memory and CPU load.

```python
# A method of the crawler class: loads one page of the topic list
async def category_loading(self, page):
    logger.info(f"Loading category page {page}")
    url = CATEGORY_URL.format(page=page)
    response_content = await self.downloader.get(url)
    json_data = json.loads(response_content)
    topics, more_topic = self.category_parser(json_data)
    if more_topic:
        next_page = int(page) + 1
        # Call the same function for the next page, without waiting for it
        asyncio.create_task(self.category_loading(next_page))
    for topic in topics:
        # One task per topic found on the page: up to 30 new requests
        asyncio.create_task(self.topic_loader(topic))
```

The stop method is worth a closer look.

```python
async def stop(self):
    # Wait until one task is left: the current one, which is running this method
    while len(asyncio.all_tasks()) > 1:
        logger.warning(f"Current tasks pool {len(asyncio.all_tasks())}")
        await asyncio.sleep(10)
    logger.warning(f"Current tasks pool {len(asyncio.all_tasks())}")
    await self.downloader.stop()
```

Since I use create_task everywhere, I don’t wait for the tasks to complete. If I didn’t check whether tasks are still running in the event loop, the script would finish almost instantly, which is not the behavior I want.

The script finishes when only one task is left, because that task is the one performing the shutdown itself. If I waited for the count to drop to 0, the script would never stop.
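A different way to wait for dynamically spawned tasks, sketched here as an alternative rather than the approach used in this crawler, is to keep your own set of tasks instead of polling asyncio.all_tasks() every 10 seconds. This also follows the asyncio documentation’s advice to hold a reference to every task returned by create_task(), since the event loop keeps only weak references to tasks. TaskTracker, spawn, wait_all and the demo coroutines are hypothetical names.

```python
import asyncio


class TaskTracker:
    """Holds strong references to spawned tasks and can wait for all of them."""

    def __init__(self):
        self.tasks = set()

    def spawn(self, coro):
        task = asyncio.create_task(coro)
        self.tasks.add(task)  # the event loop itself keeps only weak references
        task.add_done_callback(self.tasks.discard)  # forget the task once it finishes
        return task

    async def wait_all(self):
        # Finished tasks may have spawned new ones, so repeat until nothing is left
        while self.tasks:
            await asyncio.gather(*list(self.tasks))


async def demo(pages=3, topics_per_page=3):
    """Hypothetical crawl: each page spawns the next page plus a few topic tasks."""
    tracker = TaskTracker()
    loaded = []

    async def load_topic(topic_id):
        await asyncio.sleep(0.01)  # stands in for a request
        loaded.append(topic_id)

    async def load_page(page):
        await asyncio.sleep(0.01)
        if page + 1 < pages:
            tracker.spawn(load_page(page + 1))
        for i in range(topics_per_page):
            tracker.spawn(load_topic((page, i)))

    tracker.spawn(load_page(0))
    await tracker.wait_all()
    return loaded


if __name__ == "__main__":
    print(len(asyncio.run(demo())))  # 3 pages x 3 topics = 9
```

The trade-off: every task has to go through spawn(), but the script stops as soon as the last task finishes instead of on the next 10-second check.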

As a result, the function that starts the scan looks very simple:

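```python
async def start_scan():
    # Create the manager before `try`, so `finally` can always close it
    mongo = MongoManage()
    try:
        crowler = Crowler(db_manager=mongo)
        await crowler.start(start_page=0)
        await crowler.stop()
    except Exception as e:
        logger.exception(e)
    finally:
        await mongo.close()


if __name__ == "__main__":
    asyncio.run(start_scan())  # assumed entry point
```

Results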

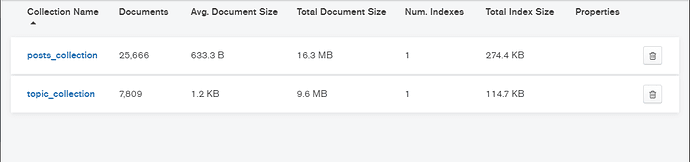

So we have 7,809 topics and 25,666 posts in them, collected by a high-performance web scraper. It may sound trivial, but to build any scraper you have to go through all three steps:

- Scanning Strategy

- Choice of technology

- Coding

Never start with point 3. You’ll make life difficult for yourself and the site owners.

You can find the full version of the code in the GitHub repository: https://github.com/Mantisus/dataquests_tag_modelling